In this mini-project we will create the infrastructure to set up a development environment for writing and testing Spark jobs.

Infrastructure:

Docker compose will provide us with the infrastructure necessary to launch our scripts and verify that they work as intended.

It provides the following services:

Master node

    spark-master:
      container_name: spark-main
      image: docker.io/bitnami/spark:3.5
      ports:
        - 9090:8080
        - 7077:7077
      environment:
        - SPARK_MODE=master
        - SPARK_RPC_AUTHENTICATION_ENABLED=no
        - SPARK_RPC_ENCRYPTION_ENABLED=no
        - SPARK_LOCAL_STORAGE_ENCRYPTION_ENABLED=no
        - SPARK_SSL_ENABLED=no
        - SPARK_USER=spark
      volumes:
        - ./apps:/opt/spark_apps
        - ./data:/opt/spark_data

- image: we use the Bitnami Spark image.
- ports:
  - container port 8080 serves the web UI and is mapped to host port 9090.
  - container port 7077 is used for communication with the workers and is mapped to host port 7077.
- volumes:
  - apps: makes dependency JARs, such as the PostgreSQL driver, available to Spark.
  - data: where the raw test data resides.
- environment: sets the environment variables needed to make this node the master node.
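Once the stack is running, the port mappings described above can be checked from the host. The following is a minimal sketch using only the Python standard library; the helper names `is_port_open` and `check_master` are hypothetical, and it assumes the published ports are reachable on `localhost`.

```python
import socket

# Host-side ports taken from the spark-master service definition.
MASTER_PORTS = {"web UI": 9090, "master RPC": 7077}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved hosts.
        return False


def check_master(host: str = "localhost") -> dict:
    """Map each master port label to whether it currently accepts connections."""
    return {label: is_port_open(host, port) for label, port in MASTER_PORTS.items()}
```

Calling `check_master()` after the containers start should report both ports as open; a `False` entry usually points at a container that is not up yet or a host port that is already taken.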

Spark workers

    spark-worker-1:
      container_name: spark-worker-1
      image: docker.io/bitnami/spark:3.5
      depends_on:
        - spark-master
      environment:
        - SPARK_MODE=worker
        - SPARK_MASTER_URL=spark://spark-master:7077
        - SPARK_WORKER_MEMORY=1G
        - SPARK_WORKER_CORES=1
        - SPARK_RPC_AUTHENTICATION_ENABLED=no
        - SPARK_RPC_ENCRYPTION_ENABLED=no
        - SPARK_LOCAL_STORAGE_ENCRYPTION_ENABLED=no
        - SPARK_SSL_ENABLED=no
        - SPARK_USER=spark
      volumes:
        - ./data:/opt/spark_data

Each spark-worker-n service (spark-worker-1 above) starts a worker node for data processing tasks.
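Since every worker shares the same definition apart from its number, the repeated blocks can be generated rather than copied by hand. The sketch below is one hypothetical way to do that in Python; `worker_service` and `worker_services` are illustrative names, and the output is plain text meant to be pasted under the `services:` key of the compose file.

```python
import textwrap


def worker_service(index: int, memory: str = "1G", cores: int = 1) -> str:
    """Render the compose definition for worker number `index`."""
    name = f"spark-worker-{index}"
    lines = [
        f"{name}:",
        f"  container_name: {name}",
        "  image: docker.io/bitnami/spark:3.5",
        "  depends_on:",
        "    - spark-master",
        "  environment:",
        "    - SPARK_MODE=worker",
        "    - SPARK_MASTER_URL=spark://spark-master:7077",
        f"    - SPARK_WORKER_MEMORY={memory}",
        f"    - SPARK_WORKER_CORES={cores}",
        "    - SPARK_RPC_AUTHENTICATION_ENABLED=no",
        "    - SPARK_RPC_ENCRYPTION_ENABLED=no",
        "    - SPARK_LOCAL_STORAGE_ENCRYPTION_ENABLED=no",
        "    - SPARK_SSL_ENABLED=no",
        "    - SPARK_USER=spark",
        "  volumes:",
        "    - ./data:/opt/spark_data",
    ]
    return "\n".join(lines)


def worker_services(count: int) -> str:
    """Render `count` worker definitions, indented to sit under `services:`."""
    blocks = "\n\n".join(worker_service(i) for i in range(1, count + 1))
    return textwrap.indent(blocks, "  ")
```

For example, `print(worker_services(3))` emits definitions for spark-worker-1 through spark-worker-3, each pointing at the same master URL.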

Database

database-1 starts a PostgreSQL container for data persistence.

    database-1:
      container_name: postgres-database-1
      image: postgres:15.3-alpine3.18
      ports:
        - 5432:5432
      volumes:
        - ./data/postgres:/var/lib/postgresql/data
        - ./data:/data_source
      environment:
        POSTGRES_PASSWORD: <secure-password>
        POSTGRES_DB: <database-name>
        POSTGRES_USER: <user-name>
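A Spark job that persists results to this database needs a JDBC URL and credentials matching the values above. The following is a minimal sketch of how those options could be assembled in Python. The helper name `jdbc_options` is hypothetical, and it assumes the same `POSTGRES_*` variables are also exported in the environment where the job runs (the compose file only sets them on the database container).

```python
import os


def jdbc_options(host: str = "database-1", port: int = 5432) -> dict:
    """Build JDBC connection options from the POSTGRES_* environment variables.

    Inside the compose network the service name `database-1` resolves as the
    hostname; from the host machine, pass host="localhost" instead.
    """
    database = os.environ["POSTGRES_DB"]
    return {
        "url": f"jdbc:postgresql://{host}:{port}/{database}",
        "user": os.environ["POSTGRES_USER"],
        "password": os.environ["POSTGRES_PASSWORD"],
        "driver": "org.postgresql.Driver",
    }


# Possible use in a PySpark job (df and the table name are placeholders):
#   df.write.format("jdbc").options(**jdbc_options()) \
#       .option("dbtable", "some_table").save()
```

Reading the credentials from the environment keeps them out of the job code, and the PostgreSQL driver JAR still has to be available to Spark for the `driver` class to load.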

Utilities

    db-admin:
      container_name: database-admin-tool
      image: adminer
      restart: always
      depends_on:
        - database-1
      ports:
        - 8080:8080

Adminer is a utility that lets us connect to the database and query the results of our data processing. We expose port 8080 so the tool can be reached at localhost:8080.

Running the infrastructure locally

You can start the services defined in the Docker Compose file above by running the following command in your terminal:

    docker compose up -d --build

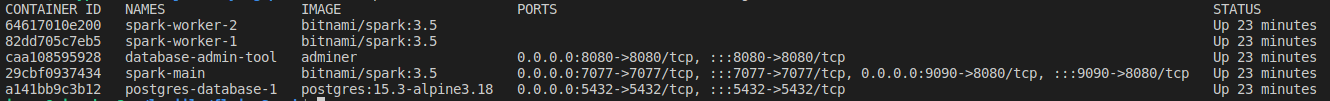

Verify that all containers are up with:

    docker ps
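The same check can be automated instead of reading the `docker ps` table by eye. The sketch below is a hypothetical helper, not part of the project: it compares the container names reported by `docker ps --format "{{.Names}}"` against the `container_name` values from the compose file, and assumes the `docker` CLI is on the PATH.

```python
import subprocess

# container_name values from the compose file.
EXPECTED = {
    "spark-main",
    "spark-worker-1",
    "postgres-database-1",
    "database-admin-tool",
}


def missing_containers(ps_names_output: str, expected=EXPECTED) -> set:
    """Return the expected container names absent from the given output."""
    running = {line.strip() for line in ps_names_output.splitlines() if line.strip()}
    return set(expected) - running


def running_container_names() -> str:
    """Return one running container name per line, as printed by docker ps."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

Calling `missing_containers(running_container_names())` returns an empty set when every service is up, and otherwise names the containers that still need attention.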

Conclusion:

Now that we have the infrastructure running, let's take a step back and write a bash script that initializes the environment and prepares it for Spark jobs without manual intervention: How to initialize a Spark dev environment